This is the second Frozen Futures post in the series on the Cryonics Survey of 2022. The first post dealt with numerous demographic and viewpoint-related questions, but I purposefully avoided getting into the deeper philosophical survey questions in that post.

By contrast, this post is going to focus on those philosophical and related matters exclusively. If you're curious about Cryonicists' beliefs regarding the mind, consciousness, existence versus nonexistence, or life versus non-life, then this post is for you.

A few notes:

Sometimes the questions were a little wordy. If the question is longer, I’ll write it in italics and I’ll write a shorthand above the italics as a title for the question.

You might notice a couple of different styles for each chart type. Sometimes the answers got a little wordy. In those cases, I used a different view to display the data as best as possible.

You’ll notice there is a fair bit of overlap across these questions. That’s because, according to the research on asking philosophical questions like this, it’s important to ask these sorts of questions in different ways using different wording and contexts in order to have any confidence in the results.

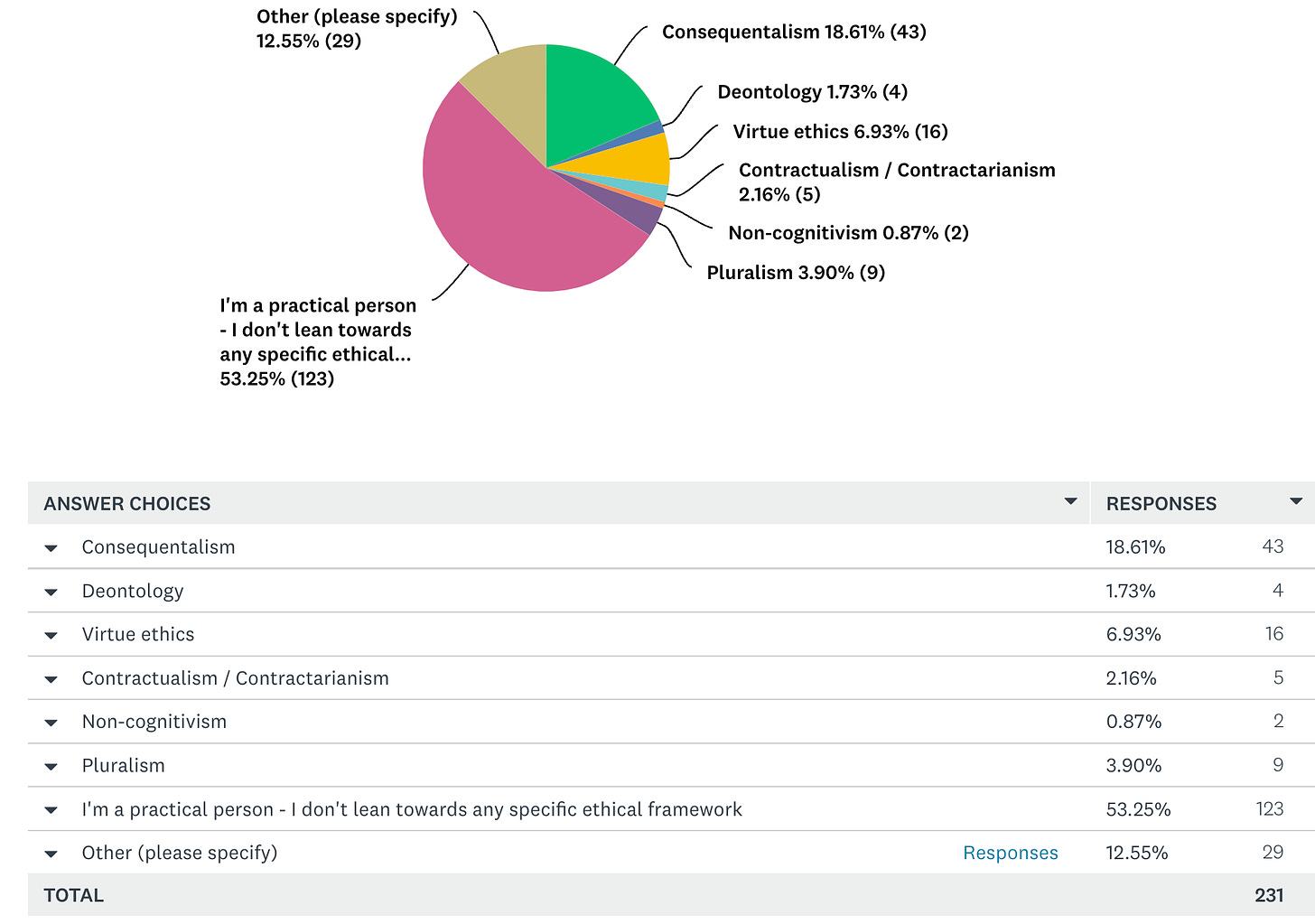

Question 26 - Ethics

“My ethical system can best be described as”

This and question 64 are the only philosophical questions in this post that don’t touch on “Theory of Mind”, “Consciousness”, or “The Simulation Hypothesis”.

I’d wager that most people who answered “I don’t lean towards any specific ethical framework” (the 53%) haven’t studied philosophy in a structured way, but I’m sure there will be those who have studied philosophy formally and will provide me with numerous reasons why that answer is the careful and nuanced philosophical position.

For what it’s worth, even many people who have studied or even taught ethics would say that they don’t subscribe to any specific ethical framework, or they would say that they bounce between the different frameworks in different contexts.

Consequentialism can be thought of as the ethical framework behind the rationalist movement, effective altruism, the economic way of thinking, etc. Deontology is more closely associated with the legal system (mostly), religion, and the constitution (mostly).

Note: A few commenters pointed out that I subtly included a meta-ethical framework in the previous answers. To them, I admit: guilty as charged. That was an oversight, and not a can of worms I was looking to get into here. Instead, I should’ve asked who subscribes to contractualism / social contract theory.

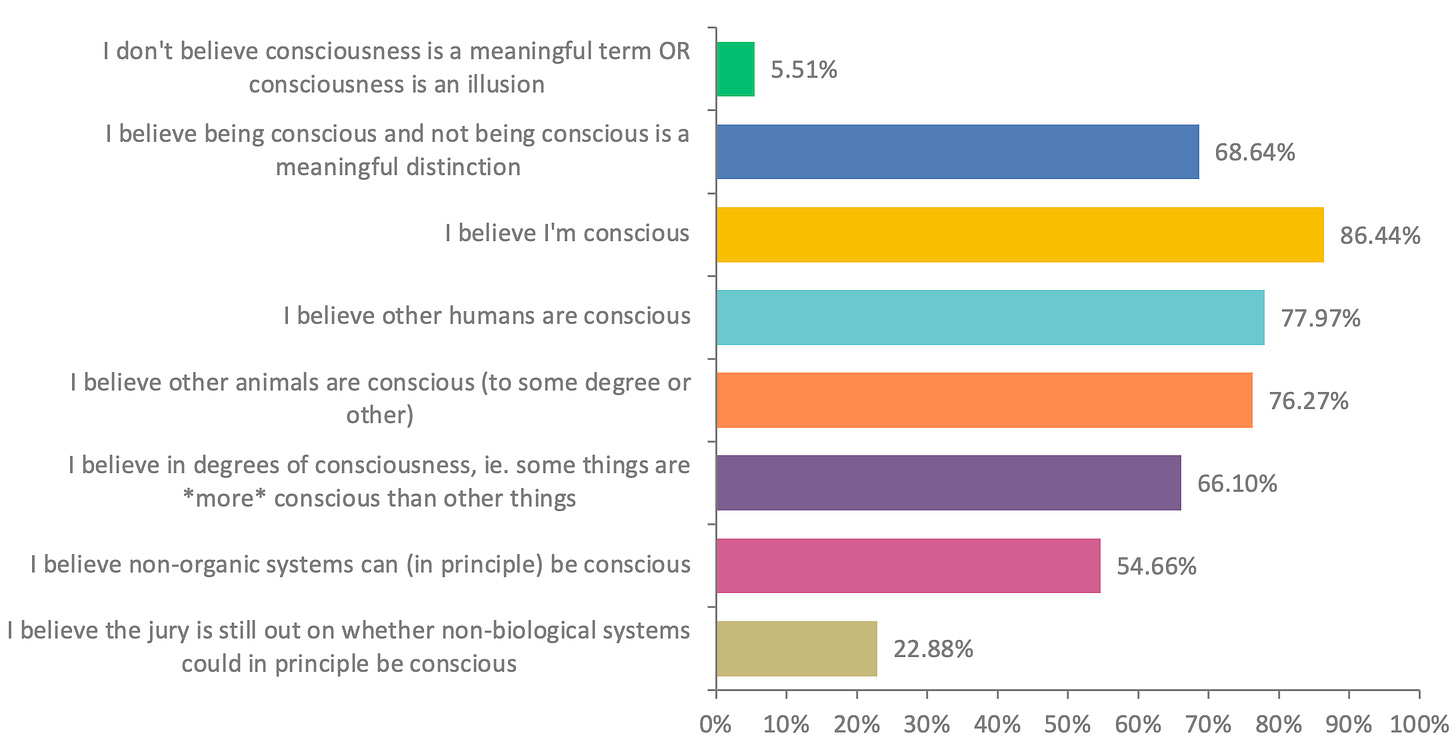

Question 27 - Views on Consciousness

There’s a fairly new field called “Experimental Philosophy” which seeks to understand what sorts of views normal people hold on questions like this. From my cursory overview of the subject, it seems people’s responses to philosophical questions are generally… very contextual. Most people do not have clear conceptions around this stuff, and most people give answers that vary greatly depending on context.

That said, we Cryonicists are dragged into the philosophical jungle on these issues because they really could end up mattering! Take, for example, the matter of non-organic consciousness. About 45% of respondents to this question do not believe that non-organic systems can (even in principle) be conscious. While the word “organic” can be defined in a few different ways (chemists would say it’s anything containing carbon), in this context, it’s safe to say it has something to do with cells, genetic material, metabolism, homeostasis, etc. In other words, not a piece of silicon. We’ll come back to this matter later.

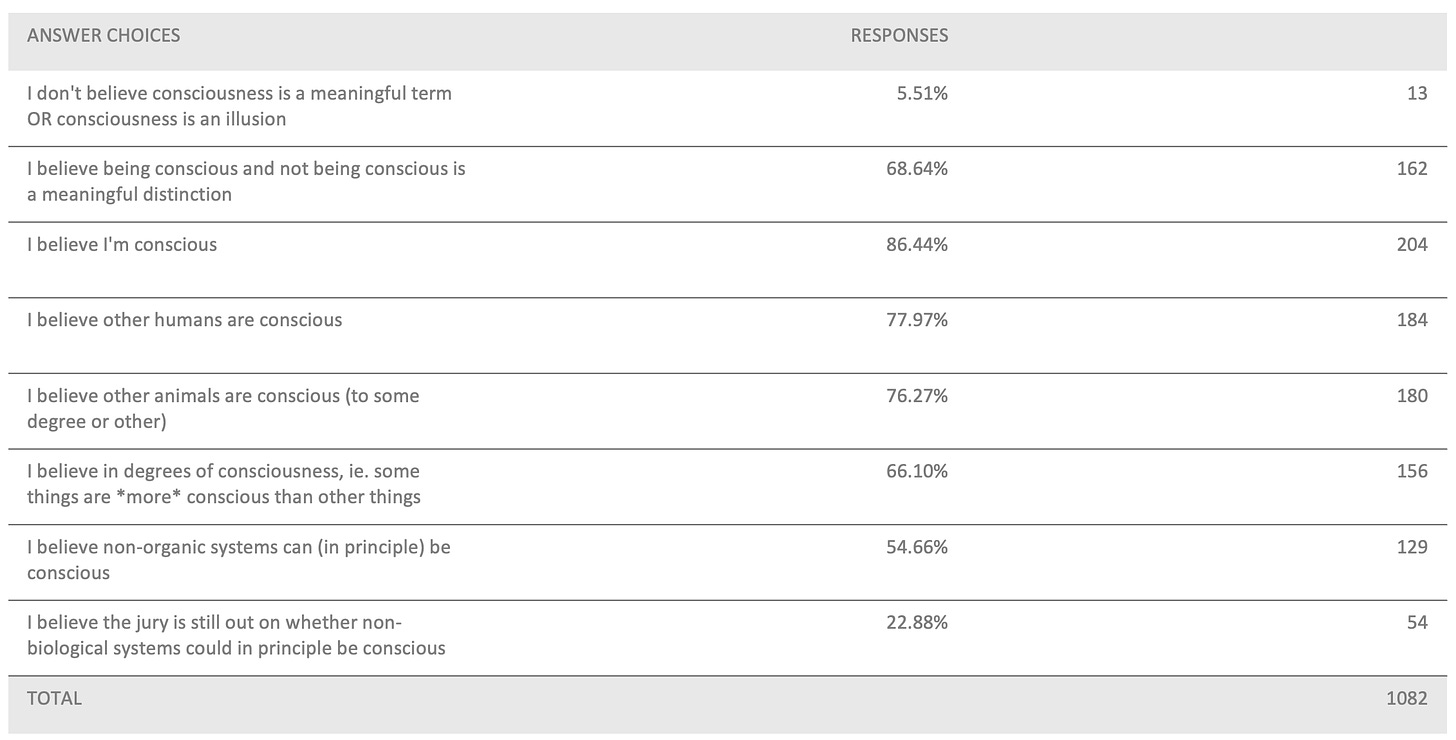

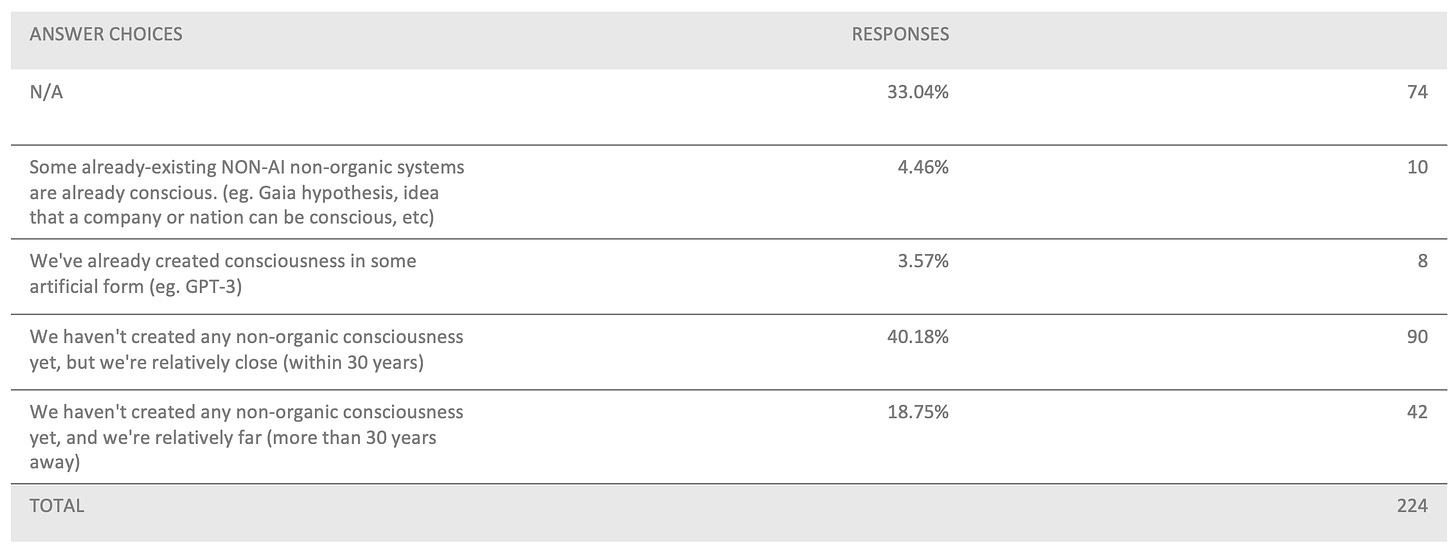

Question 28 - What can be conscious?

“If you answered ‘yes’ that non-organic system can, in principle, be conscious, do you also believe that”

Among those who believe non-organic systems can achieve consciousness, the majority believe we haven't quite reached that point yet, but we're close.

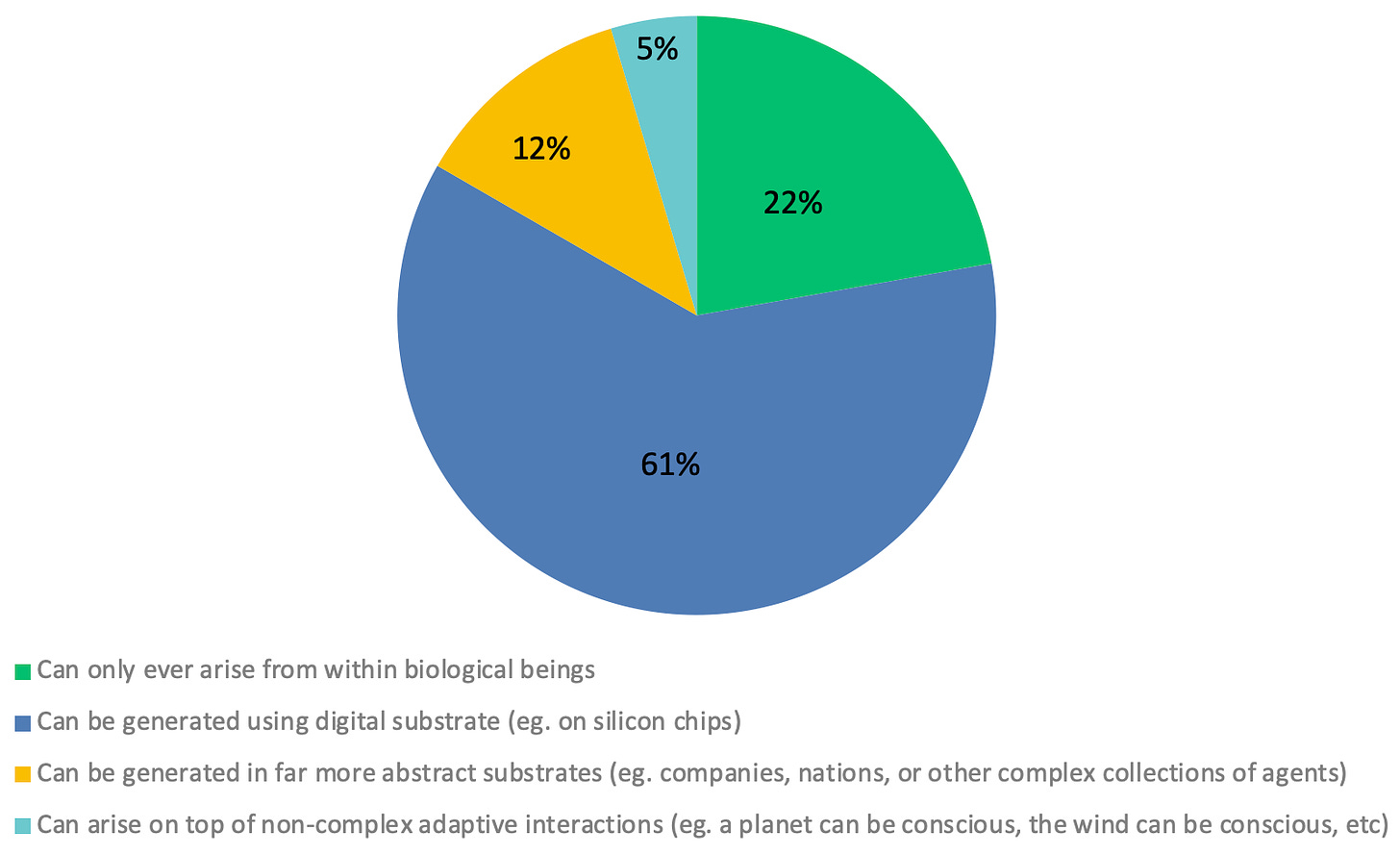

Question 29 - Where can “consciousness” come from?

This is another question that dug into people’s beliefs on what consciousness is and where it could come from. The answers here shift slightly in response to the somewhat different wording.

In this case, ~60% of people said you can create consciousness on silicon, which is fairly close to the 55% of folks that believed consciousness didn’t require organic “stuff” as a substrate.

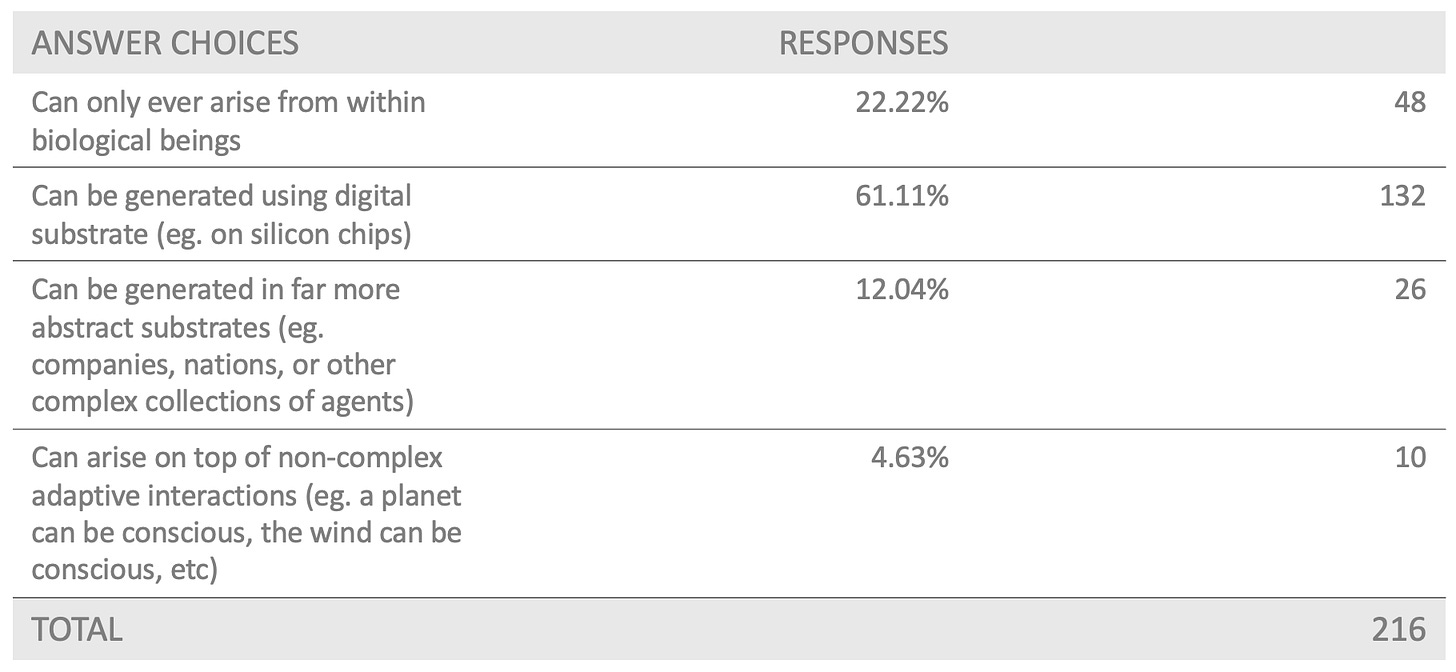

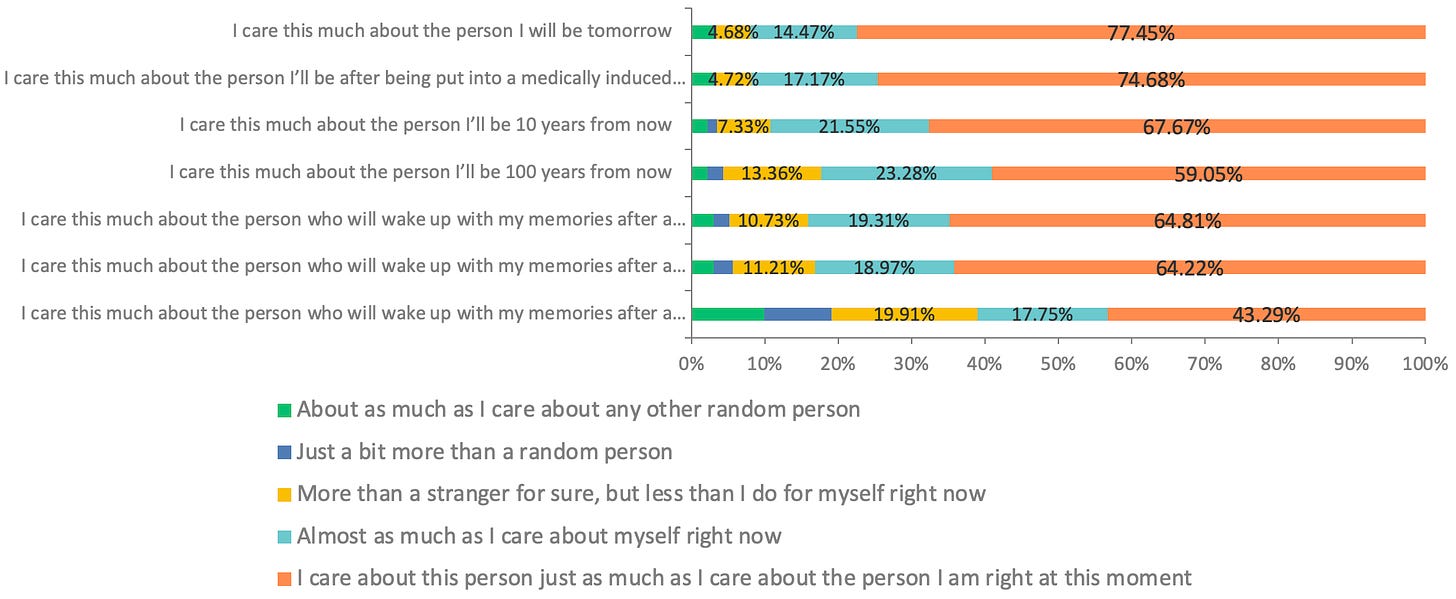

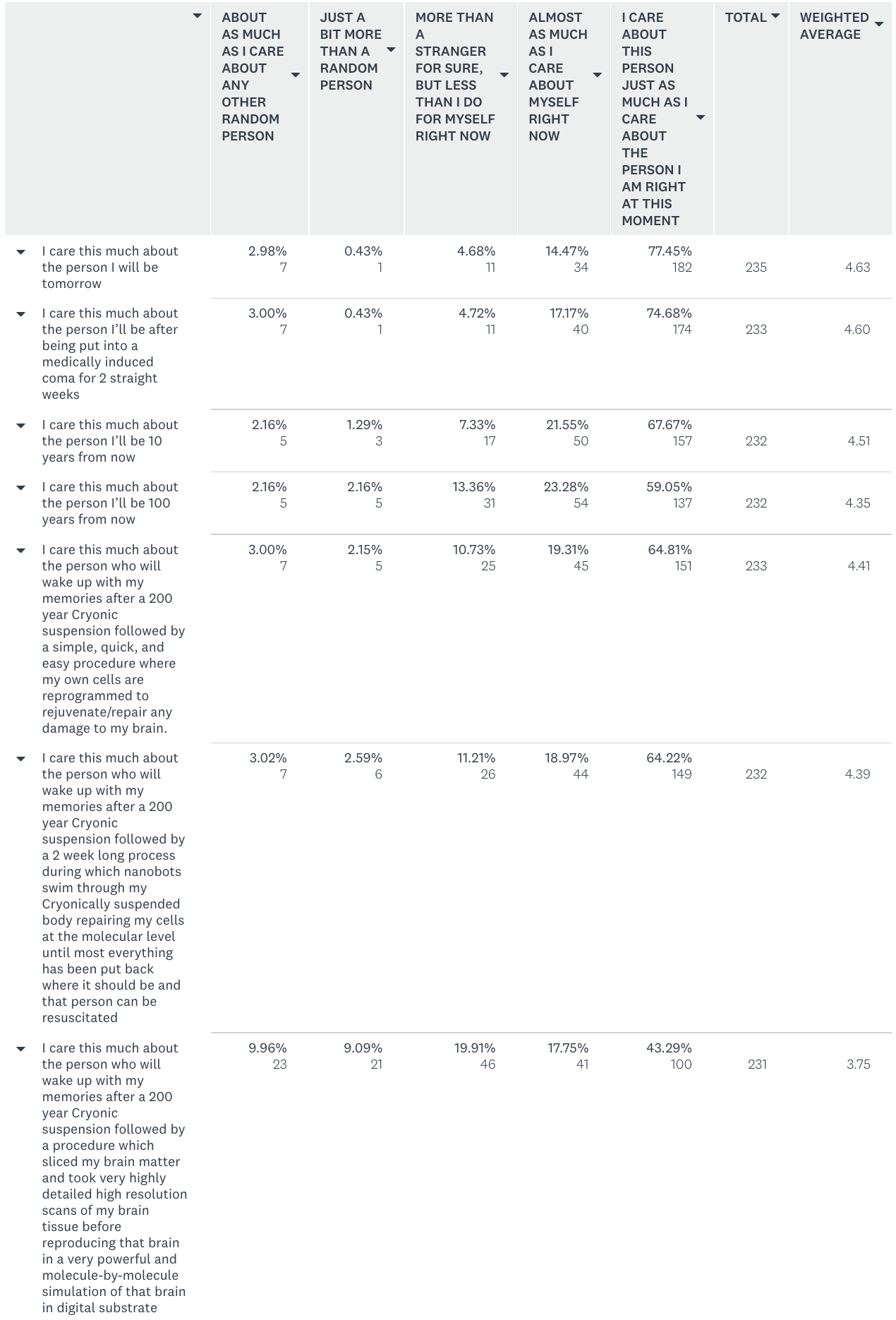

Question 30 - Views on later versions of “you”.

For the following questions, please indicate how much you would care about this person in comparison to the person you are right now.

Typically, our level of 'care' for others increases the closer they are to us across various dimensions, such as time, familial connections, identity, and so forth. In this case, we’re examining how much respondents will care about themselves under various scenarios that seem to make “that person” more “distant” along these various dimensions. An apt analogy here might be the concept of a 'discount rate' in economics. People will typically care less about something if they receive it in the future than if they receive it now (even when controlling for the risk that they never end up getting it).

The aim of this question was to gauge how much individuals 'discount' versions of themselves that are not only distant in time but potentially distant in other ways… such as being simulated on a computer.

This is a messy chart and some messy data. To simplify, you can look at the “weighted average” in the rightmost column. The numbers are going down, as one would expect.
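For anyone unsure what that “weighted average” column represents, here is a minimal sketch of how survey tools typically compute one. Everything in it is an assumption for illustration: the 1-to-5 scale, the function name, and especially the response counts, which are made up and are not the survey's actual data.

```python
def weighted_average(counts_by_score: dict[int, int]) -> float:
    """Average score, where each score is weighted by how many people chose it."""
    total = sum(counts_by_score.values())
    if total == 0:
        raise ValueError("no responses to average")
    return sum(score * n for score, n in counts_by_score.items()) / total


# Hypothetical counts on an assumed scale of 1 ("don't care at all")
# to 5 ("care just as much as I do about myself today").
near_future = {1: 2, 2: 3, 3: 10, 4: 25, 5: 60}
far_future = {1: 15, 2: 15, 3: 20, 4: 20, 5: 30}

print(f"near-future self: {weighted_average(near_future):.2f}")
print(f"far-future self:  {weighted_average(far_future):.2f}")
```

The point of collapsing each row into one number is that a falling average is easier to read than five shifting bars, at the cost of hiding how split the answers are.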

To me, the most interesting observation here is that the numbers don’t go down as quickly as I had expected. A full 43% of respondents claim to care just as much about the person they’ll be in 200 years after being destructively mind-uploaded as the person they are today, right now. This is baffling to me. Does this mean all of those folks have an effective discount rate of zero? Does it mean those respondents would be just as happy taking a 1% chance of death today as they would a 1% chance of that simulated person dying in 200 years? Seriously - if you took the survey and you’re in the 43%, I’d appreciate your insights on this in the comments or on the Discord or wherever else.
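To make the "discount rate of zero" point concrete, here is a rough sketch under one big assumption: that caring about a future self follows simple exponential discounting, the textbook model from economics. The survey did not ask about discount rates, and the function names and sample rates below are mine, purely for illustration.

```python
def discount_factor(annual_rate: float, years: float) -> float:
    """Weight placed on something `years` away under exponential discounting."""
    return 1.0 / (1.0 + annual_rate) ** years


def implied_annual_rate(weight: float, years: float) -> float:
    """Annual discount rate implied by caring `weight` (0 < weight <= 1)
    as much about a self `years` away as about your present self."""
    if not 0.0 < weight <= 1.0:
        raise ValueError("weight must be in (0, 1]")
    return weight ** (-1.0 / years) - 1.0


# How much weight a self 200 years away gets at a few assumed annual rates.
for rate in (0.0, 0.01, 0.03):
    print(f"{rate:.0%} per year -> weight after 200 years: {discount_factor(rate, 200):.4f}")

# Caring "just as much" (weight 1.0) about a self 200 years away
# only works out if the annual rate is exactly zero.
print(implied_annual_rate(1.0, 200))
```

Under this model, even a modest 1% annual rate shrinks the weight on a self 200 years away to roughly 0.14, so answering "just as much" really does correspond to a rate of zero. Of course, respondents may not be discounting exponentially at all, which is part of why I'd like to hear from them.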

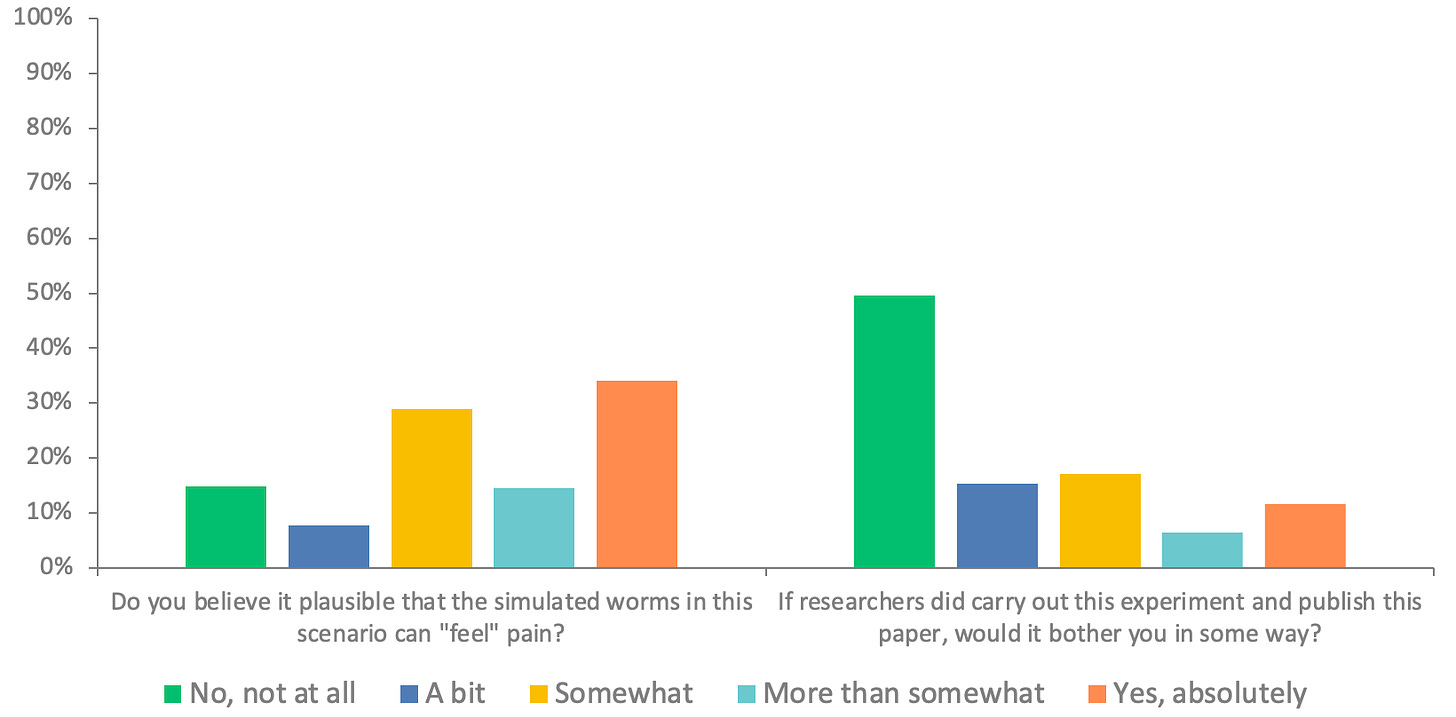

Question 31 - Caring about simulated pain and simulated life

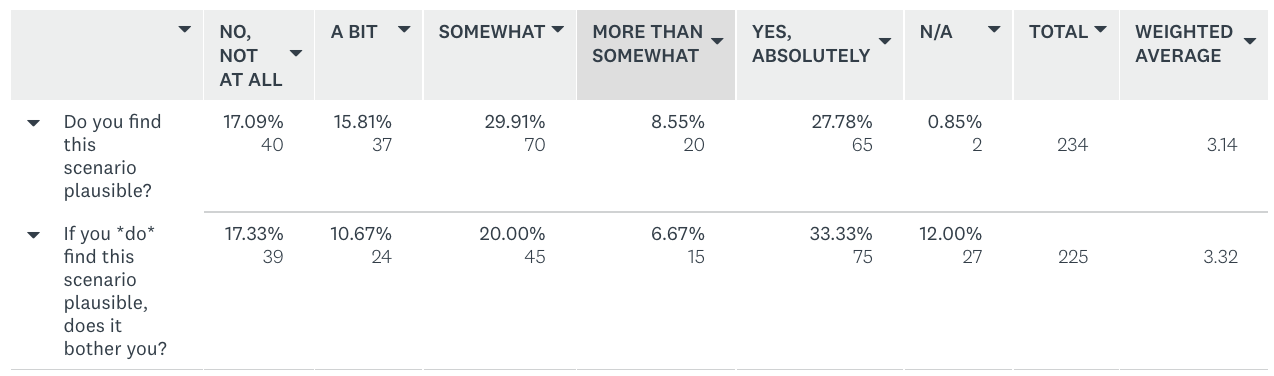

A team of researchers from Stanford published research in a reputable scientific peer-reviewed journal that they have created simulated worm brains that can *feel* pain. This worm-program runs on a super-computer, and researchers claim they're simulating these brains with as much fidelity as real worm-brains. To date, they have managed to replicate this experiment with as many as 30 thousand simulated worm brains to validate their results.

This was really two questions in one. First - do you believe it’s plausible that “simulated worms” can exist at all, and second, can those “simulated worms” feel pain? It’s usually not a good idea to ask two questions in one like this on a survey, but I still find the results here informative, primarily because about 50% of respondents were highly or completely convinced that such an experiment is plausible. For the people who were at the “no, not at all plausible” end of the spectrum, it would’ve been helpful to ask them why they felt this way.

For the second portion of this question, I should’ve asked why respondents were bothered, if they were bothered, since there are at least two distinct ways that respondents could’ve been bothered: one is because the researchers are causing needless suffering, the other is because the researchers are creating and deleting life at-scale mindlessly. That said, 50% of respondents weren’t bothered one bit by either of these potential issues.

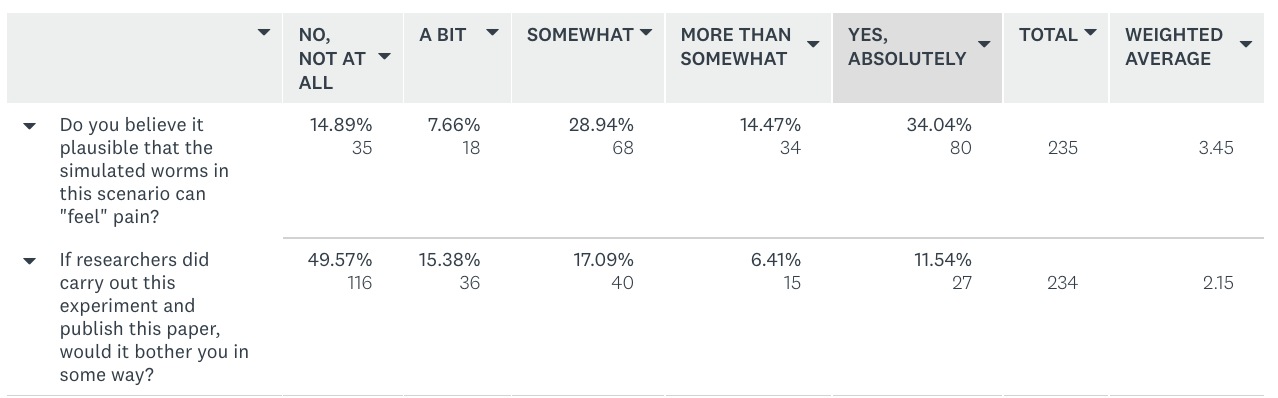

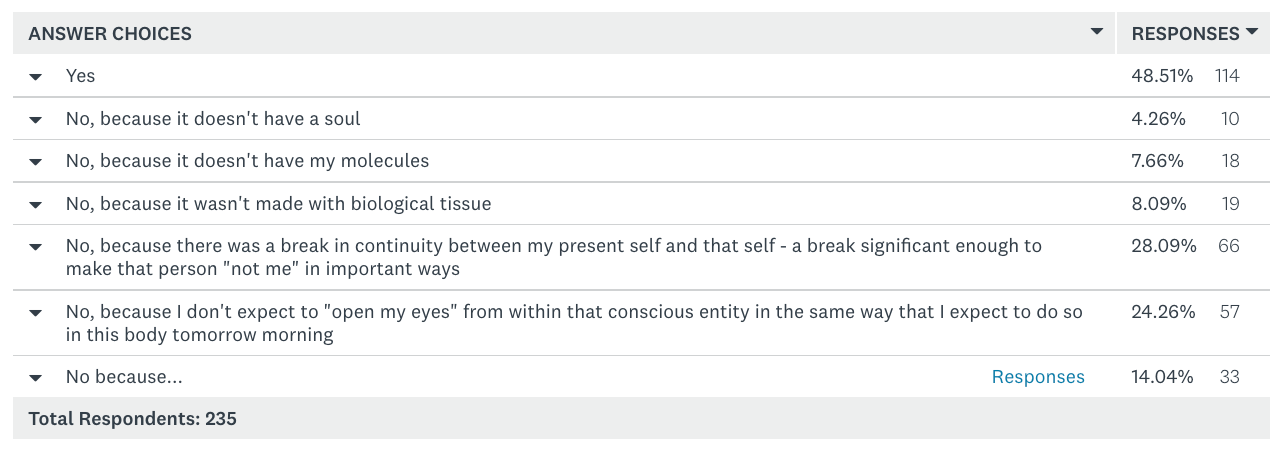

Question 32 - Do you care about “copies” of “you”?

You wake up from Cryonic suspension in 250 years. You’ve been rejuvenated using a form of nanotech. You’re told you’re not living in a simulation. However, you are told that a very precise high-resolution non-destructive scan was taken of your brain while you were in biostasis - and they are using that simulated mind to perform numerous menial tasks such as running the systems on probes sent to other solar systems, drones, control systems, other such things.

This is another one where follow-up “why” questions would’ve been useful. Did people think this scenario wasn’t plausible because it doesn’t make economic/practical sense to have copies of them doing these tasks (e.g. they think pure AI would be better at flying probe-drones around the solar system), or do people think this isn’t plausible because simulating their consciousness in this way isn’t plausible?

Opinions on the topic were quite heavily divided. Of respondents who did find this scenario plausible, 1/3 of them were very bothered by the idea that this could be happening to copies of them. Would they be just as bothered if it involved copies of someone else? We’ll have to wait for a future survey to know.

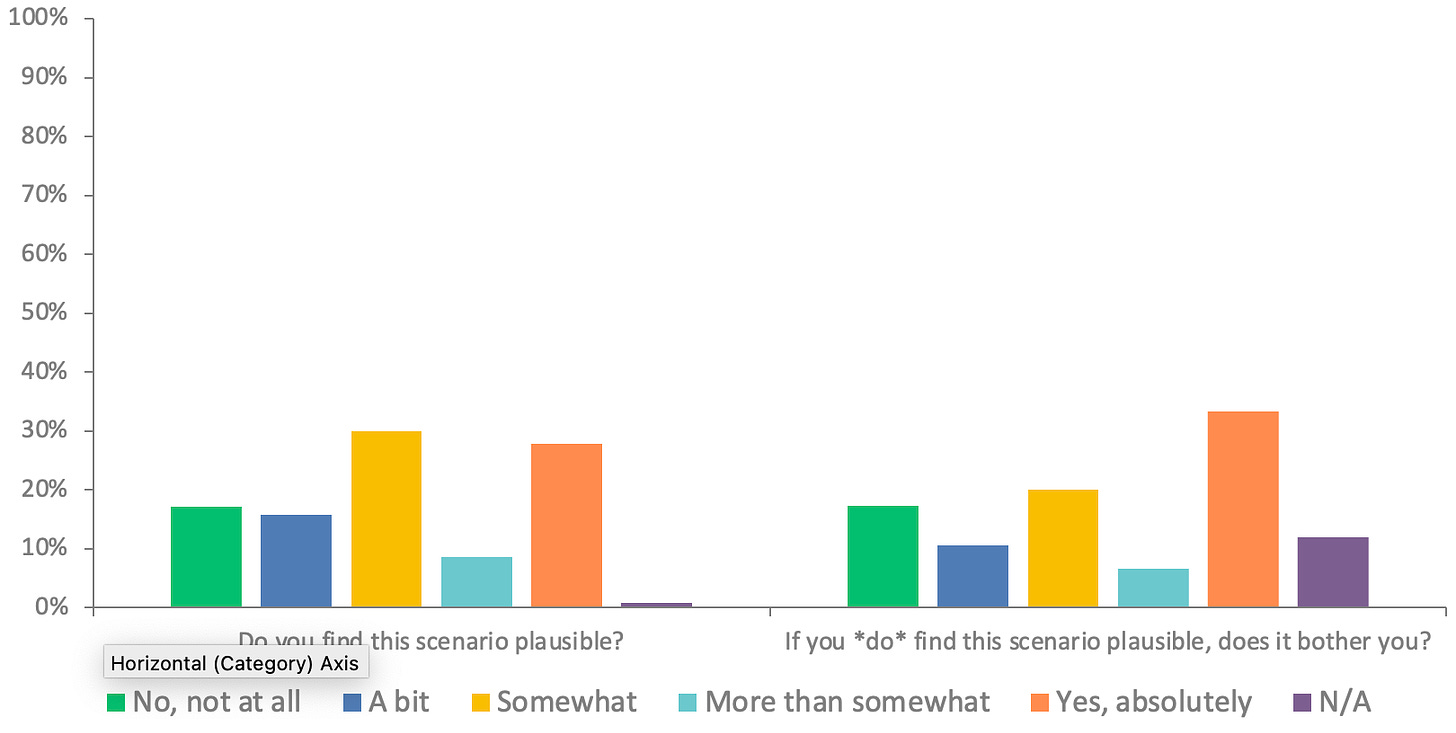

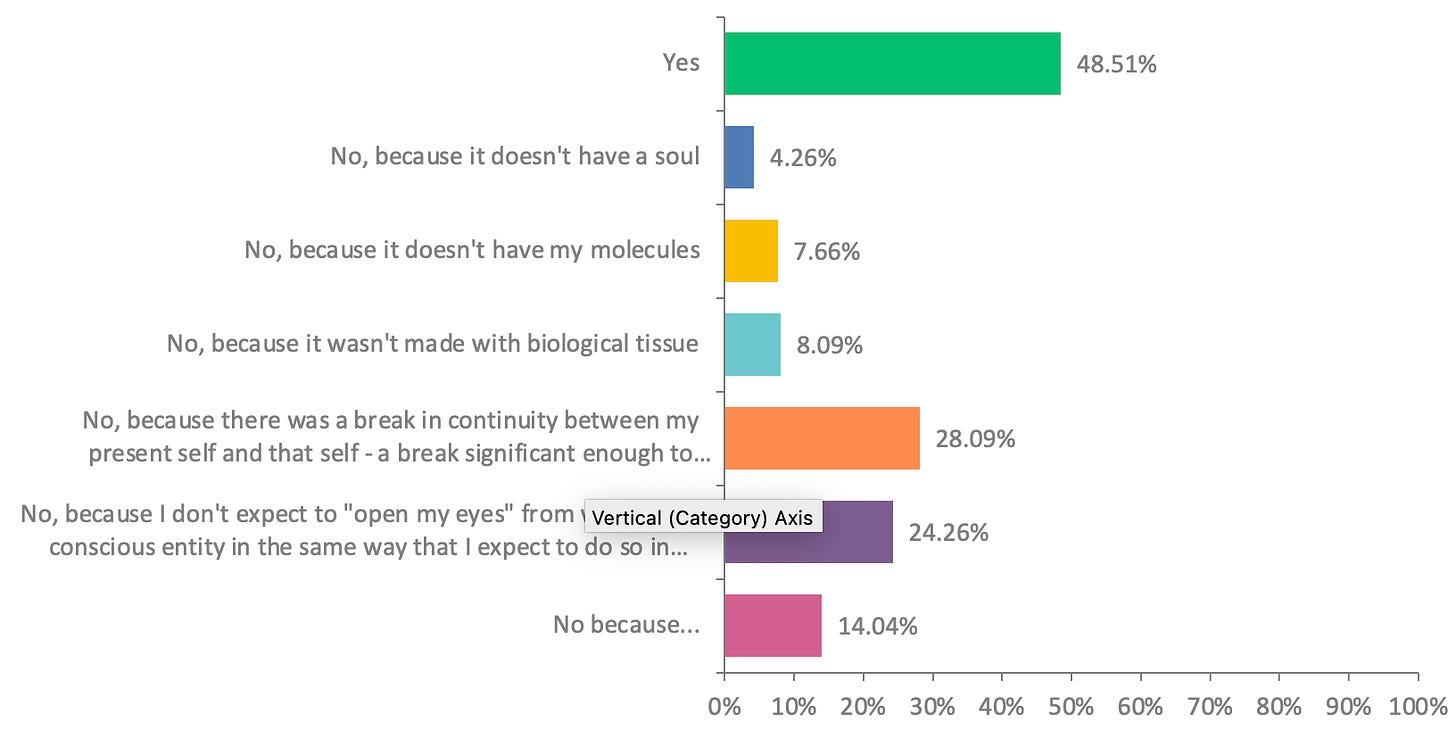

Question 33 - “Simulated you” vs “you”

If your brain is destructively scanned and a simulated digital copy of that scan is run on a very sophisticated computer in the future - is that person "you" in the ways that you feel matter?

Alright, the moment we’ve all been waiting for. The direct “what do you think about uploading” question. So far, a lot of the questions have hinted at this sort of breakdown, but this one is the clearest example in this portion of the dataset:

—> About 50% of respondents think destructive uploading is them in all the ways that matter.

That’s big! 50%! Half of the people you’ll likely meet in this community will have one view and half of them will have the other view. That’s fine, but what bothers me about this is that most people whom I’ve met, on either side of this debate, seem to believe that people who hold the other viewpoint are delusional, credulous, unsophisticated, and might as well believe in the tooth fairy. In my experience, people on either side can’t imagine the other point of view, or they think the opposing view is so ridiculous that it’s not worth discussing. I hope I’m not the only one that finds this both bizarre and fascinating.

In my experience, the rationalist or “rationalist adjacent” tend to have the pro-uploading perspective. People who aren’t in those camps tend to have the anti-uploading perspective, or are at least worried about it enough to opt against it. I’ve had conversations with both Robin Hanson and Scott Alexander in person on this subject, and both of them thought the anti-uploading position was so silly as to be beneath them to debate.

Anyway, food for thought. This question was, for me, a significant motivator for conducting this survey in the first place - so I see this result as something of a vindication of my prior belief that this issue really does cleave the community into two equal halves.

We’ll now move beyond the purely philosophical questions and look at how Cryonicists view death, dying, and existence itself.

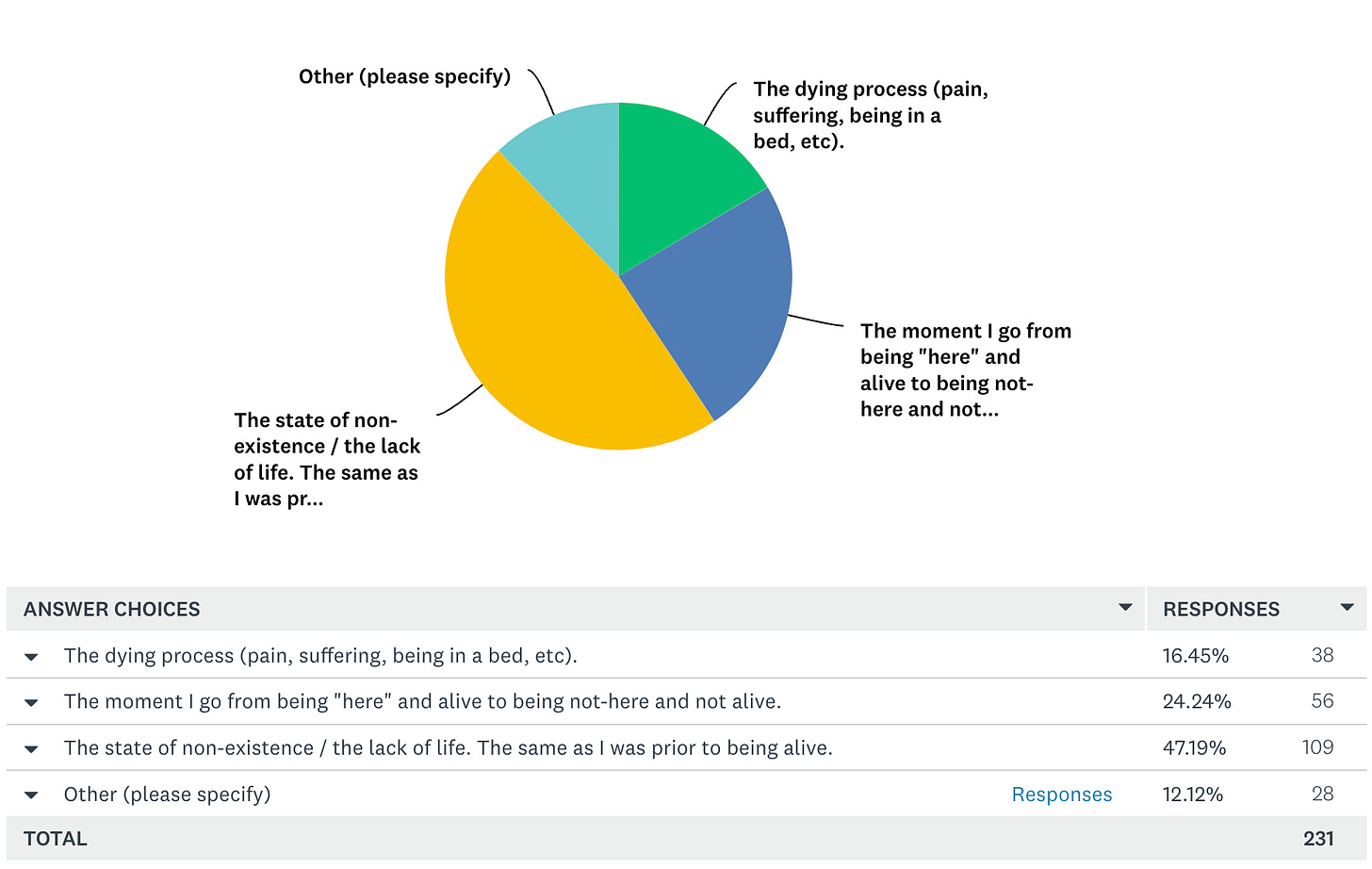

Question 40 - Defining Death

What is the *first* thing that primarily comes to mind when you think of "death"?

I haven’t found any broad-scale surveys that seek to answer which of these definitions of death is most widely held by the general public. If you know of any, feel free to point to them in the comments and I’ll insert a note here.

I would have assumed that the most common definition of death among Cryonicists would be the moment of transition from 'living' to 'dead.' However, surprisingly, it's the state of non-existence, with nearly 50% of respondents identifying that as the first definition that comes to mind.

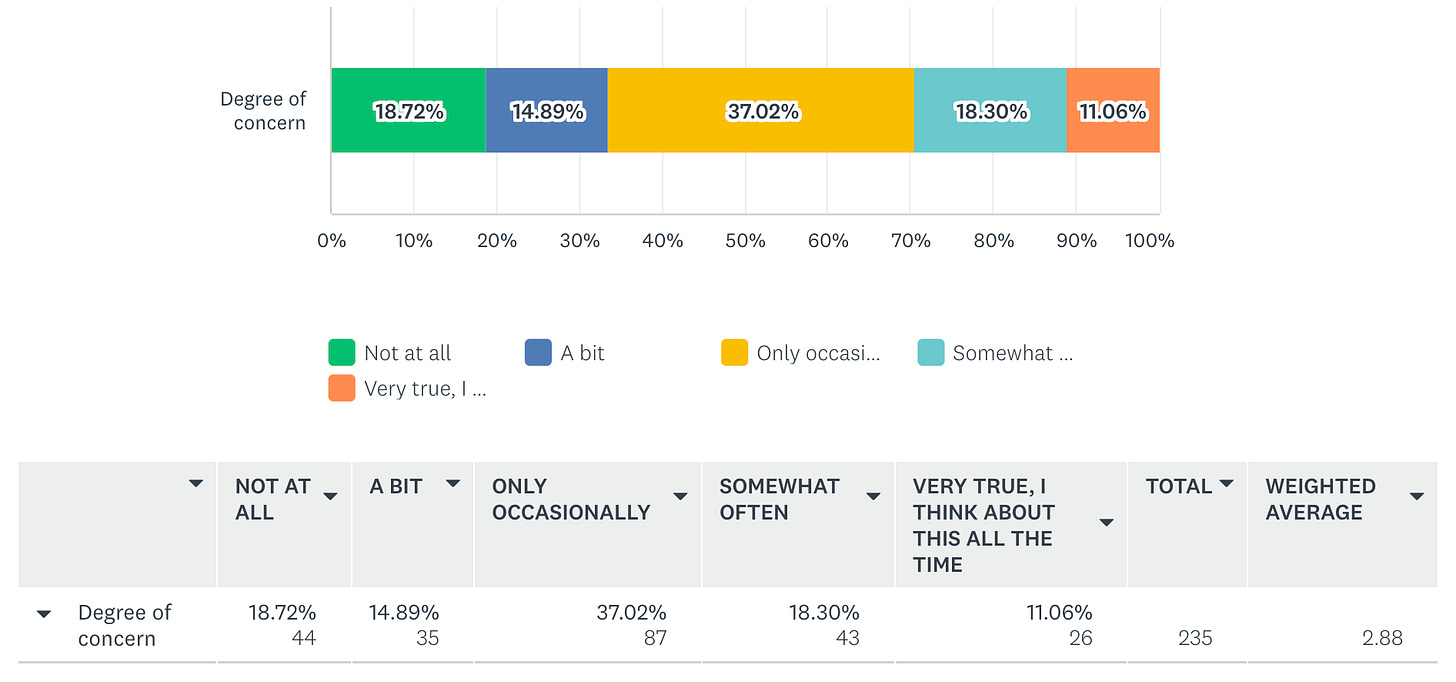

Question 41 - Death Rumination

I frequently think about my own death or the process of dying in very vivid / realistic terms.

There is a belief among some people that Cryonics is composed entirely of people who have a high degree of death anxiety. This question (and the following questions) sought to determine to what extent that was true or false.

Although I don’t know how the general population would answer this question, the findings here suggest that death rumination (and associated death anxiety) are not highly prevalent among Cryonicists, at least, not more than average. But I’m open to correction on this if someone finds some clear data indicating otherwise in the general population.

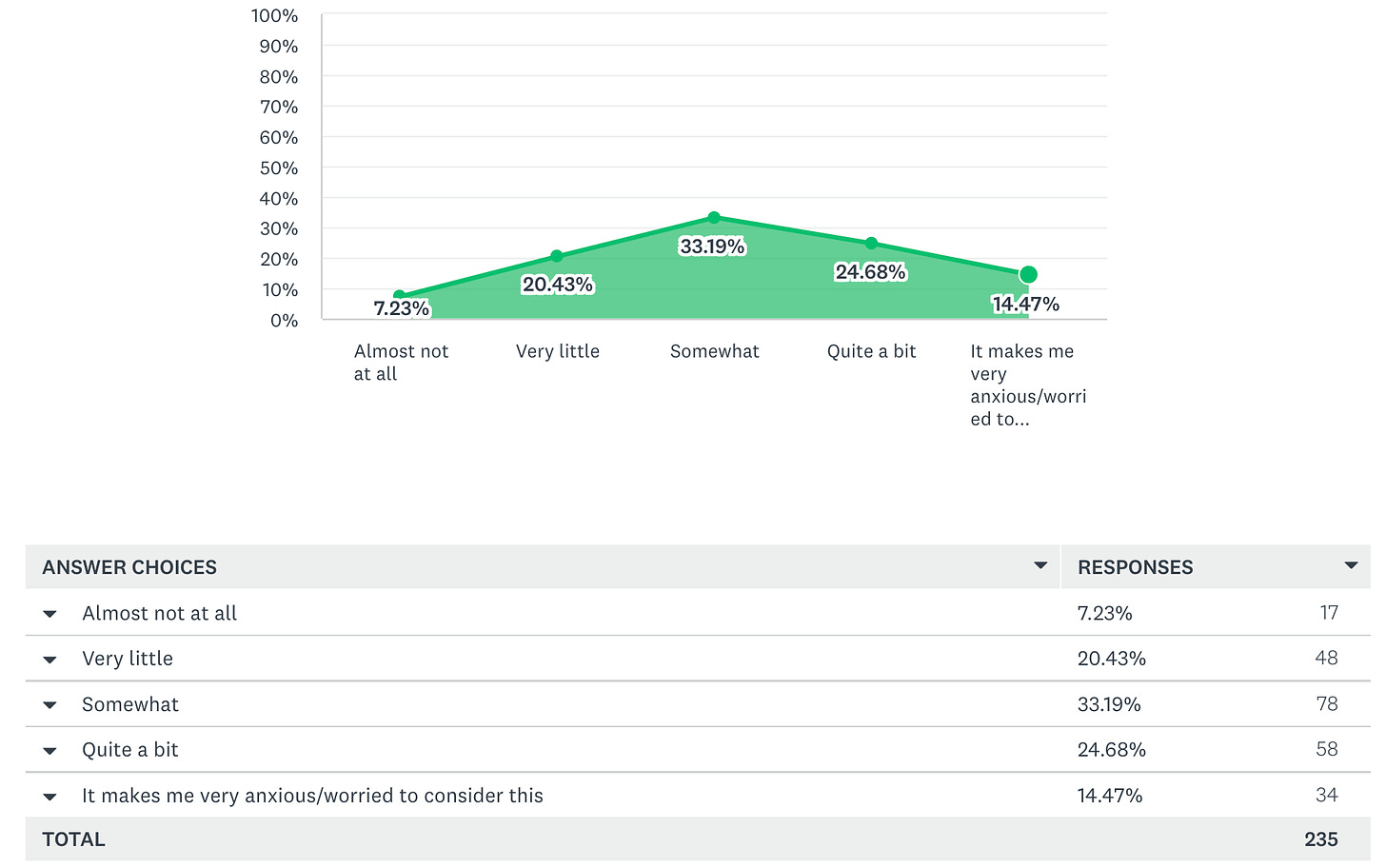

Question 42 - Anxiety Around Dying Process

How anxious does it make you to think about the process of dying?

People seem to have average (dare I say, mostly healthy?) levels of anxiety around this subject.

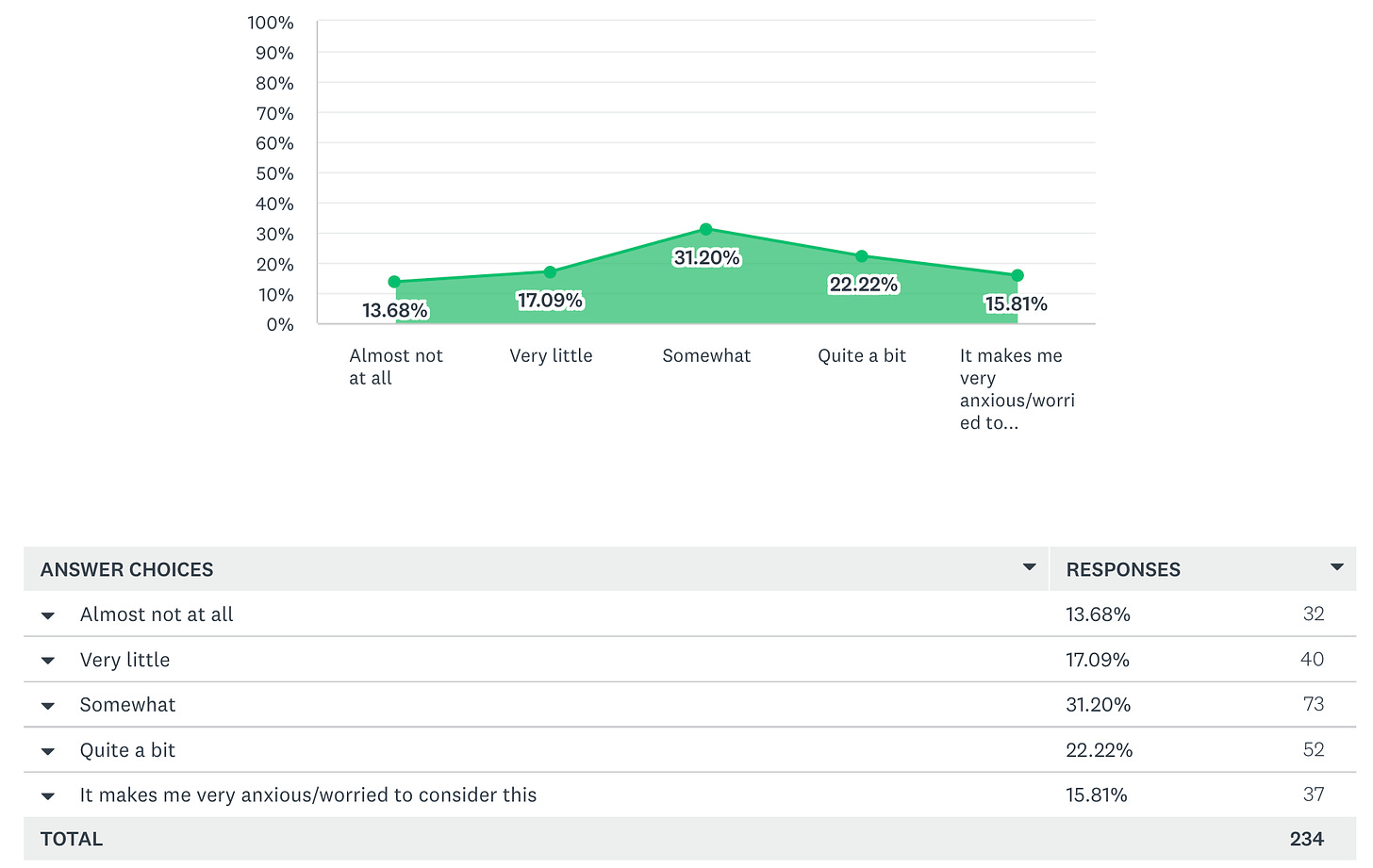

Question 43 - Anxiety Around Moment of Death

Do you feel anxious when you think about the moment you go from being alive to not being alive?

A similar-looking curve to the prior question.

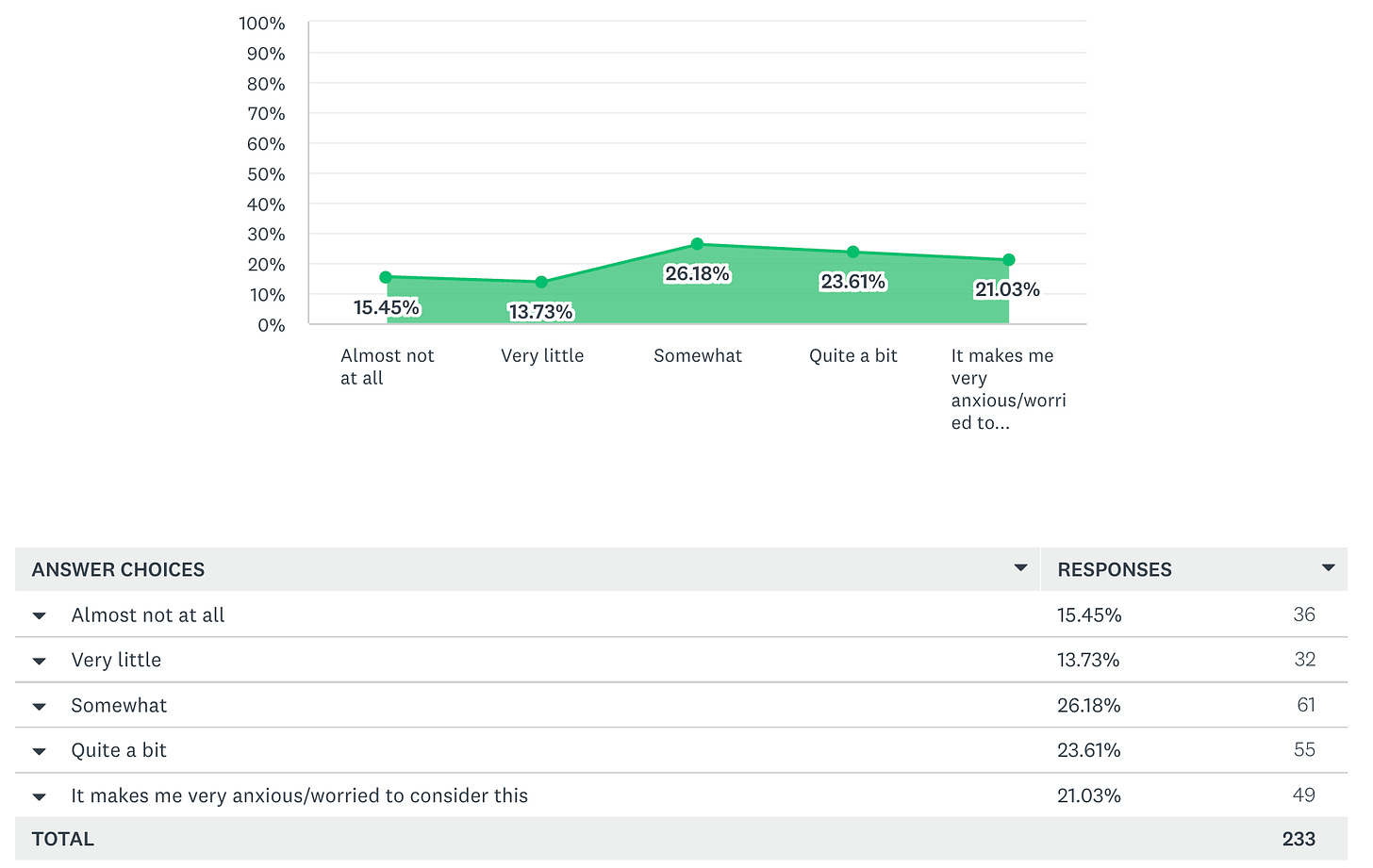

Question 44 - Anxiety Around Non-Existence

When you consider death as the state of non-existence that both proceeds and preceded your life, how worried are you about this non-existence you were and would (or could) be subject to?

Noticeably more anxiety around this one (the “it makes me very anxious” numbers have gone up).

Question 64 - The Truth Shall Set You Free?

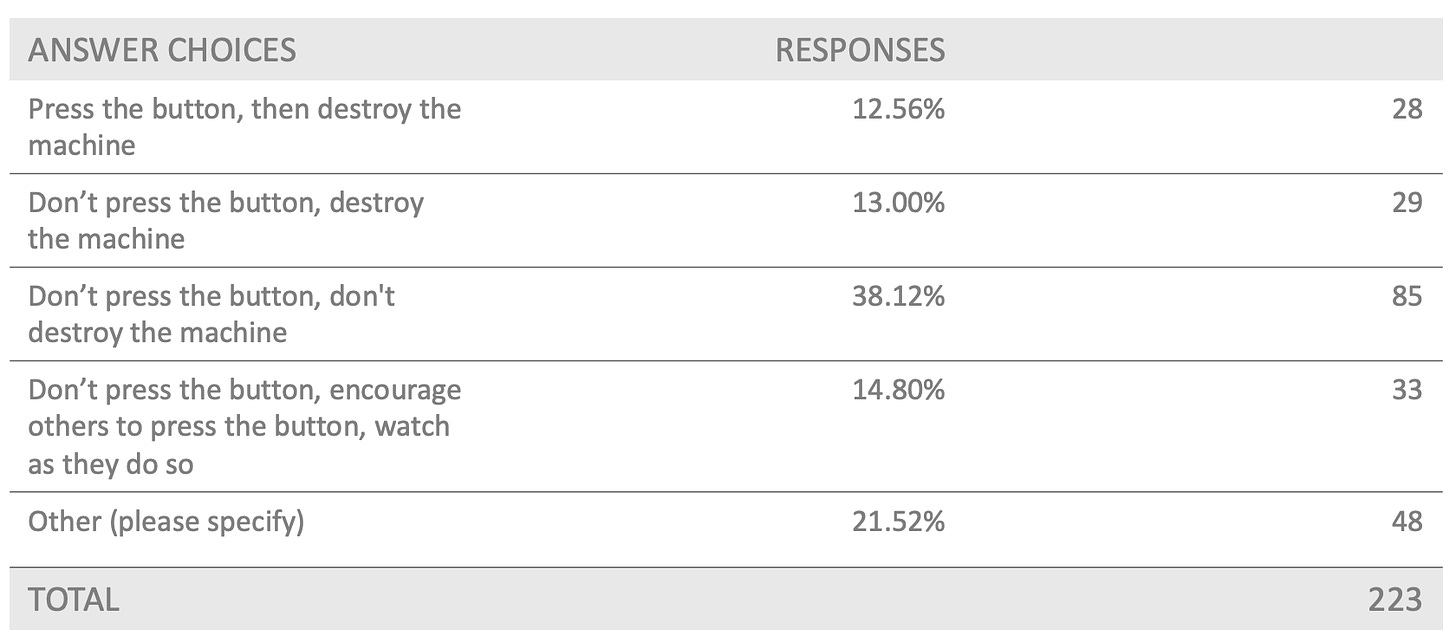

A "magical" machine can tell you one true-fact about the universe each time you press a button. The catch is - that one true fact will be the true-fact calculated to cause you personally the highest possible psychological harm & emotional distress. Do you:

This was a bit of a curveball. When I originally published the survey, I had accidentally omitted the obvious “press the button, don’t destroy the machine” response. Several people told me this question caused them to lose sleep for a night or two after pondering it.

I looked over the written responses, which were quite fun. Here are a few of the more creative ones:

Press the button. Cry. Grow stronger. Press the button again.

Press the button, don't destroy the machine (no such fact would ever cause me *that* much psychological "harm")

Press the button, find volunteers

Press the button as many times as needed until I know everything there is to know (Max note: this is one of those clever “wishing for more wishes” cheat codes!)

Plug my ears, turn on a recording device, press the button, let somebody who is not me hear the recording and decide what to do with the information

Would not go anywhere near it

Quickly looking over most of the other written-in responses, most of them were happy to press the button a lot, over and over.

Wow... some brave souls! Cryonicists must be high in emotional fortitude, really care about the truth, or … maybe they just haven’t experienced a lot of emotional distress in their lives and don’t know what that sort of pain can be like. At any rate, draw your own conclusions, as always.

Here are a number of philosophically-oriented questions I wish I had thought to ask this time around. They’ll have to wait:

Free Will & Determinism: Are all events 'determined'? Do individuals possess free will? Can both of these concepts coexist (compatibilism), or is that impossible?

Ethics and copies: If you’re a copy (aka. one of multiple simulations of the same mind), should you be held responsible if one of your other copies breaks a law? Should you be accountable to contracts your copy agrees to?

If we agree to a contract stating I’ll pay you $100 today, you’ll pay me $50 in 200 years’ time (adjusted for inflation), and then you end up being simulated 1,000 different times simultaneously in 200 years… am I owed $50 by one of those simulations, $50 by all 1,000 of those simulations individually, or am I owed nothing by any of them?

The third post in this series on the Cryonics Survey of 2022 will look at how Cryonicists see each other, their cryonics service providers, and other related issues.

Stay tuned for that one.